Vertical AI Requires Vector Databases: How to Design and Scale an ML Platform Capable of Vertical AI

Pienso integrates with Qdrant for vertically scaling the power of auto-regressive models.

Why vector databases matter more than ever

Perhaps the most valuable application of vector databases is their ability to improve the accuracy of large language models (LLMs), or foundation models, particularly by reducing hallucinations, the unfounded claims these models sometimes generate. This advantage comes into focus when considering the relationship between vector databases and the extensive context windows managed by advanced LLMs. A context window is the span of text a model can take into account at once, the frame of reference that lets it interpret the meaning and context of a specific piece of text.

A vector database serves as a robust reservoir of high-dimensional vectors: mathematical representations of features, attributes, or relationships buried in text data. By tapping into these vectors, LLMs can produce concrete, database-backed responses rather than leaning solely on patterns identified during training. The result is more grounded, factual output that is less prone to hallucination.

Better predictions power stronger results for downstream tasks

The utility of vector databases is enhanced when combined with the large context windows of LLMs. With that extended context, an LLM can consider a broader swath of the conversation or document, making more accurate and contextually relevant predictions for downstream tasks.

How it works

When a model with a large context window receives a prompt, the system can query the vector database for vectors related to the input and its context. It ranks candidate vectors by a similarity measure such as cosine similarity, and then uses the retrieved information to guide the text generation process.
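As a minimal sketch of the retrieval step described above, the following pure-Python example ranks a handful of stored vectors by cosine similarity to a query vector. The three-dimensional vectors, the document labels, and the `top_k` helper are hypothetical and chosen only for illustration; a production system would use model-generated embeddings and a vector database's indexed search rather than a linear scan.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention for zero vectors (an assumption of this sketch)
    return dot / (norm_a * norm_b)

def top_k(query, store, k=2):
    """Return the k (label, score) pairs most similar to query, best first."""
    scored = [(label, cosine_similarity(query, vec)) for label, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical toy "embeddings"; real ones have hundreds of dimensions.
store = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "trial protocol": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]

results = top_k(query, store, k=2)
for label, score in results:
    print(f"{label}: {score:.3f}")
```

The text attached to the highest-scoring vectors is what would be placed into the model's context window before generation.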

This system blends the data-backed accuracy provided by the vector database with the contextual sensitivity offered by the large context window, ensuring more factual, contextually relevant, and consistent output, which significantly reduces the possibility of hallucinations.

Why Pienso chose Qdrant

After a thorough evaluation of high-performing vector databases, Pienso selected Qdrant for its best-in-class LLM interoperability.

Our partnership makes it easier for commercial organizations to trust the results they generate, which is key to unlocking the potential of LLMs and interactive deep learning.

Compared to other vector databases, Qdrant stood out in four distinctive areas:

- Efficient Storage

Qdrant’s storage efficiency is a standout feature. In our benchmark, we found that Qdrant could store 128 million documents, consuming just 20.4GB of storage and only 1.25GB of memory. This efficiency will provide substantial hardware cost savings while ensuring that the system remains responsive, even when handling extensive data sets.

- Distributed Deployment

Qdrant supports a distributed deployment mode where multiple Qdrant services communicate with each other to distribute data across peers. This approach significantly increases storage capacity and stability, which makes it a key enabler for working with the massive data sets often seen in LLMs and deep learning.

- Written in Rust

The choice of programming language is a significant aspect when considering the performance and safety of a database. Qdrant is written in Rust, a programming language known for its speed, memory safety, and concurrency capabilities. Rust provides Qdrant an edge in ensuring high-performance operations with robust data protection.

- Memmap

Vector databases, or vector search engines as they’re sometimes called, are designed specifically for storing and searching high-dimensional vector data, a form of data representation common in machine learning models. This focus makes them an indispensable tool for achieving efficient and rapid retrieval in systems that engage with large-scale machine learning models. Qdrant supports memmap storage, a feature that provides fast performance comparable to in-memory storage. This capability is critical in a machine learning context where rapid data access and retrieval are required for training and inference tasks.
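To illustrate the general idea behind memory-mapped storage, the sketch below uses Python's standard `mmap` module to read one vector from a file without reading the whole file into memory. This is not Qdrant's implementation; the file layout (fixed-size float32 records), the vector dimension, and the helper names are assumptions made for illustration.

```python
import mmap
import os
import struct
import tempfile

DIM = 4                             # hypothetical vector dimension
RECORD = struct.Struct(f"<{DIM}f")  # one little-endian float32 vector

def write_vectors(path, vectors):
    """Write vectors back to back as fixed-size float32 records."""
    with open(path, "wb") as f:
        for vec in vectors:
            f.write(RECORD.pack(*vec))

def read_vector(path, index):
    """Read a single vector by index through a read-only memory map."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            # The operating system loads pages lazily, so only the region
            # touched here needs to be brought into memory.
            return RECORD.unpack_from(mm, index * RECORD.size)

# Values are chosen to be exactly representable as float32.
vectors = [[float(i), i + 0.5, i + 1.0, i + 1.5] for i in range(1000)]
path = os.path.join(tempfile.mkdtemp(), "vectors.bin")
write_vectors(path, vectors)

vec = read_vector(path, 42)
print(vec)
```

Because records have a fixed size, the byte offset of any vector is simply its index times the record size, which is what makes random access through the map cheap.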

By building on Qdrant, Pienso expands access to LLMs, making interactive deep learning practical for a broader range of users building a more diverse set of use cases.

Taking Pienso + Qdrant to market

How will building with a high-performing, open source vector database benefit our customers and strengthen our value proposition with partners?

Every commercial generative AI use case we encounter benefits from faster training and inference, whether mining customer interactions for next best actions or sifting clinical data to speed a therapeutic through trial and patent processes.

- Streamlined search speeds results that power AI return on investment

Vector databases excel in their ability to perform efficient nearest-neighbor search, a critical function in interactive deep learning. Given the complex and high-dimensional nature of data in LLMs, finding similar vectors, or the “nearest neighbors,” can be a computationally expensive task. Through smart indexing and partitioning methods, vector databases greatly enhance the speed of these nearest-neighbor searches, accelerating both the training and inference process for users.

- Future-Proof Your Models No Matter How Large Your Data Set Grows

Qdrant is inherently designed for scalability. Increasing data volumes, an inevitable reality, will not compromise performance. The ability to efficiently manage growing data volumes is crucial for a database used in machine learning processes, where data grows rapidly and each addition further enriches the model.

- Run On Prem or In the Cloud Without Managing Another Environment

Qdrant runs well on bare metal, which lets Pienso integrate Qdrant directly into our stack and eliminates the need for enterprise customers to manage an additional environment outside of Pienso. This benefit applies to our cloud offerings, too. Avoiding database environments that are managed outside of our platform supports data sovereignty and autonomous LLM regimes, maintaining a customer’s full span of control.

- Code-Level Data Persistence Enhances Data Safety and Restorability

Thanks to Qdrant’s implementation in Rust, a language celebrated for its memory safety guarantees, users can expect robust data protection. Additionally, Qdrant employs write-ahead logging (WAL), which records changes in a log before they are applied to the database so that they can be recovered after a failure, providing an additional layer of data safety.
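The write-ahead logging idea can be sketched in a few lines: every change is appended to a durable log before it is applied to the in-memory state, so the state can be rebuilt by replaying the log after a crash. This is a toy illustration under stated assumptions, not Qdrant's WAL; the `TinyStore` class, the JSON-lines log format, and the file name are all hypothetical.

```python
import json
import os
import tempfile

class TinyStore:
    """Toy key-value store that logs each write before applying it."""

    def __init__(self, log_path):
        self.log_path = log_path
        self.data = {}
        self._replay()

    def _replay(self):
        """Rebuild in-memory state from the log, if one exists."""
        if not os.path.exists(self.log_path):
            return
        with open(self.log_path, "r", encoding="utf-8") as log:
            for line in log:
                entry = json.loads(line)
                self.data[entry["key"]] = entry["value"]

    def put(self, key, value):
        # 1. Append the change to the log and force it to disk.
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # 2. Only then apply it to the in-memory state.
        self.data[key] = value

log_path = os.path.join(tempfile.mkdtemp(), "wal.jsonl")
store = TinyStore(log_path)
store.put("doc-1", [0.1, 0.2])
store.put("doc-2", [0.3, 0.4])

# Simulate a restart: a fresh instance recovers state by replaying the log.
recovered = TinyStore(log_path)
print(recovered.data == store.data)
```

A real log would also handle partially written entries, checksums, and truncation once changes are safely persisted elsewhere; those concerns are left out here to keep the ordering of "log first, apply second" visible.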

What’s next: Delivering model-autonomous, hallucination-free interactive AI

The partnership between Pienso and Qdrant delivers a combination of no-code/low-code interactive deep learning with efficient vector computation engineered for open source models and libraries.

Bolstered by the power to swiftly and efficiently retrieve similar vectors from a high-dimensional space, Pienso’s deep learning solutions powered by Qdrant ensure quick and seamless interactions for organizations training their own LLMs for downstream tasks that require data sovereignty and model autonomy.

The Qdrant vector database enables the development of new kinds of applications on top of unstructured data of any kind: text, images, audio, and video. We’re proud to partner with Pienso to equip their ML platform to turn text data into insights regardless of the end users’ programming ability.

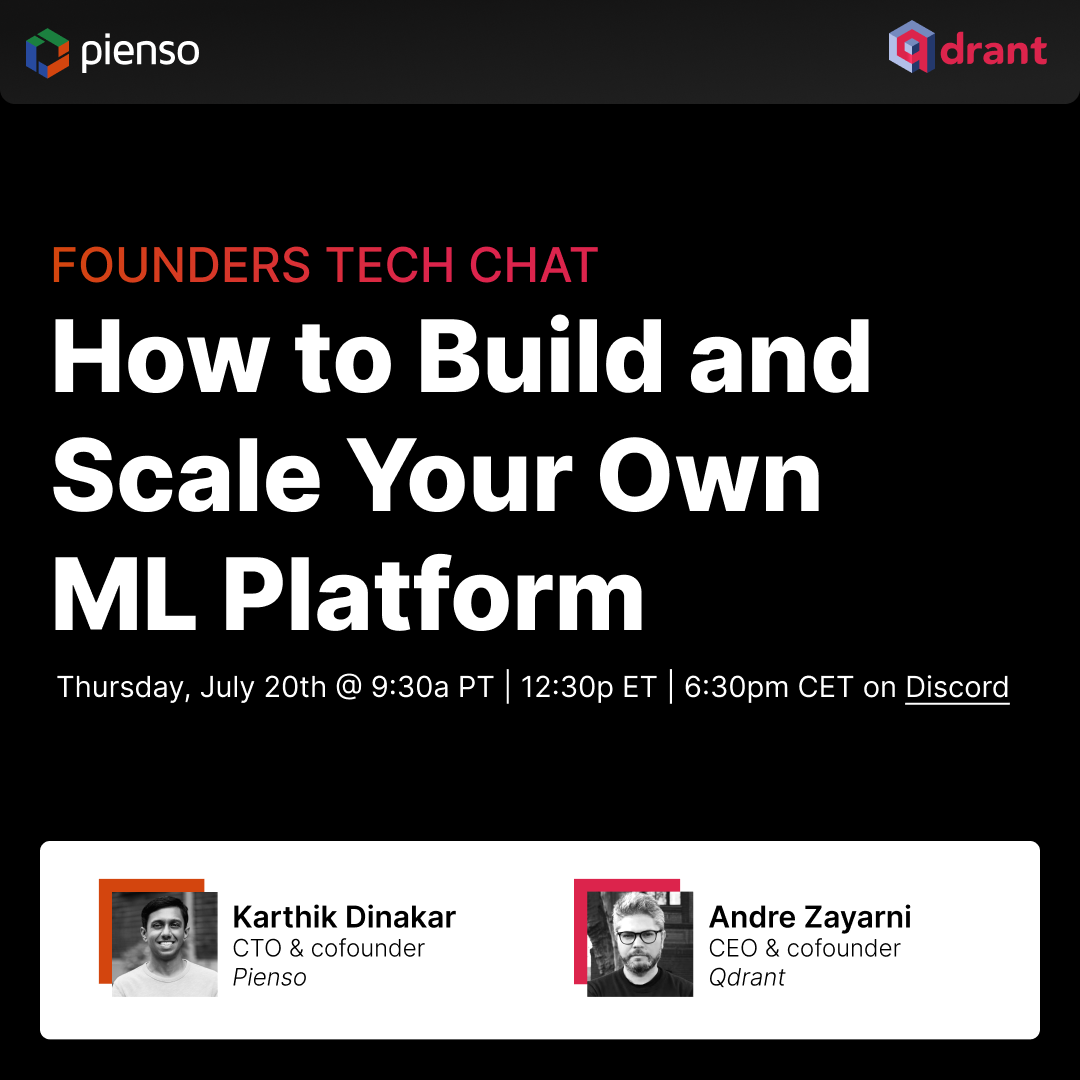

Learn more about why we chose Qdrant in our Founders Tech Chat on Discord, July 20th.