Our user-friendly interface is your invitation to explore.

Where other platforms start with big promises and fail at the gate, often because they require costly customization, Pienso lets you go as far as your curiosity leads.

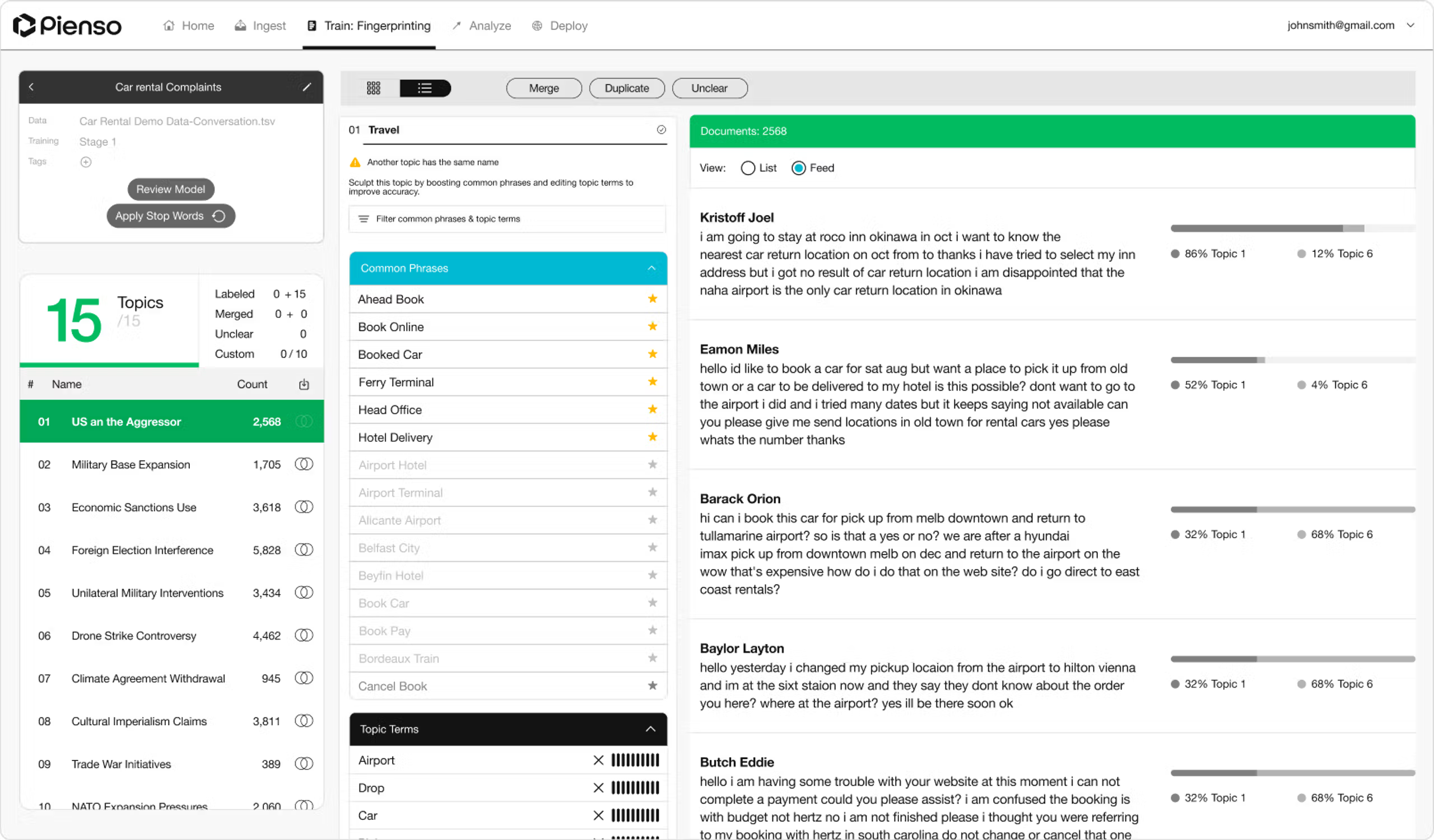

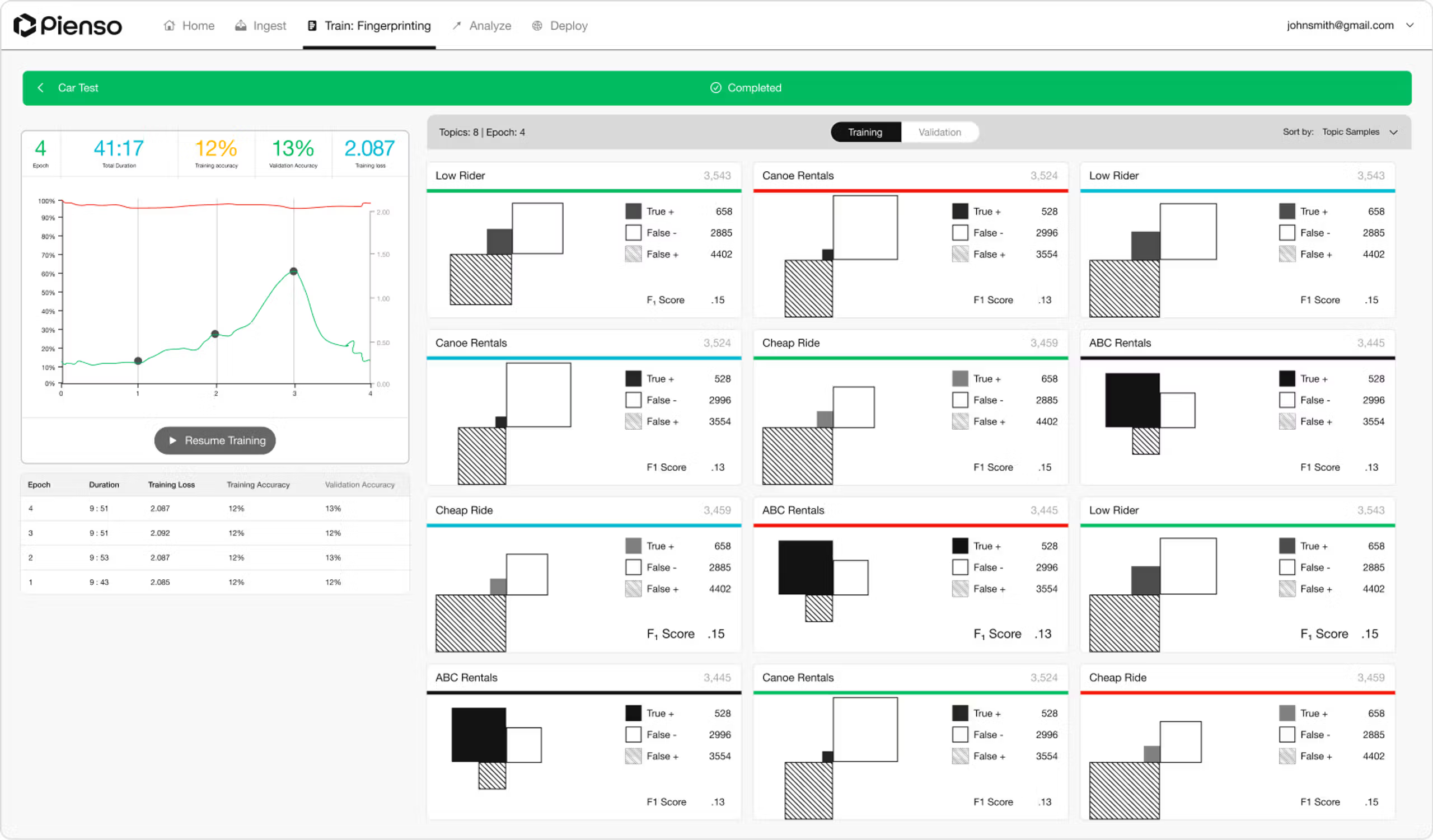

UI for AI that puts you in control.

When specialists rely on data scientists to label their data and build models, nuanced specialist insight is often lost. And when they rely on closed-source models, they miss out on AI’s full potential. Pienso wraps powerful under-the-hood AI in an intuitive, point-and-click interface, so you can train models freely and independently.

Available wherever you need us.

In the cloud…

Use your existing infrastructure and data sources. Pienso can be deployed on the major cloud provider of your choice, including GCP, Azure, AWS, and Gcore.

Or in your environment.

If you prefer, Pienso can also be deployed on-premises, so your deployment aligns with your organization’s IT and security strategy.


With high performance…

Pienso is optimized to run on the market-leading, highest-performing AI/ML accelerators: Nvidia GPUs and Graphcore IPUs. We’ll help you decide which one best fits your needs.

Engineered for speed. Because Pienso is deployed alongside your source data, inference time is minimized. We’re pushing the boundaries of accelerator hardware; even as you read this, we’re making Pienso faster for you.

Technology by us. Knowledge by you.

No one model can do everything. That’s why Pienso helps you bring a garden of LLMs together to answer your most complex questions, with the flexibility to retrain and adapt them as quickly as your needs and aims evolve.